Life 3.0 (book)

🔗Connect

🔼Topic:: Artificial Intelligence

✒️ Note-Making

💡Clarify

🔈 Summary of main ideas

- Intelligence is scarier than malice - a superintelligent AI may simply not care about us, our goals, or our wellbeing, just as humans are indifferent to ants

- Information is the resource of the future - since both matter and energy will be abundant in our cosmic future, it is information that will be worth trading

- Philosophize now - theories of morality and consciousness should be at the heart of today's discussion about AI

- Medium independence - perhaps some aspects of our lives as human beings, specifically information and how we process it (consciousness), are medium-independent. If so, then in those aspects an AI could in principle simulate a human being, or a human could replace their biology with technology.

🗒️Relate

⛓ Life lessons, action items

🔍Critique

✅ by following this method, what will happen? You will be more aware of the possible benefits and dangers of upcoming AI, i.e. superintelligence, which could pose a threat but also bring the biggest breakthroughs for humanity.

❌ the logical jumps, holes or simply cases where it is wrong... There is little added value from reading this book. Not having action items is one thing, but the book offers barely any general information or conclusions other than "philosophy is important" (which I support) and "we must talk about AI". It is a collection of "what ifs" rather than a detailed analysis. Each chapter covers a topic in just a few pages, which doesn't allow for a deep understanding of it.

🧱 Implementations and limitations of it are... Since the AI field was still very new when the book was written, there is hardly any evidence about the possible futures we might face, so much of it reads like a fairytale without credibility, and the rest is an invitation to discussion without much basis.

🗨️Review

💭 my opinions on the book, the writer's style... The book is easy to read: the chapters are well divided and well summarized at the end of each chapter. The accompanying tables and charts make the topics easier to understand. The writing style is nice and interesting enough to keep you reading.

🖼️Outline

📒 Notes

Part 1 - Welcome to the Most Important Conversation of Our Time

Chapter 1 - A Brief History of Complexity

Complexity in the universe, in life, and in technology increases over time, creating a greater capacity to fulfill our potential.

- “the first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control.” (Location 135)

Chapter 2 - The Three Stages of Life

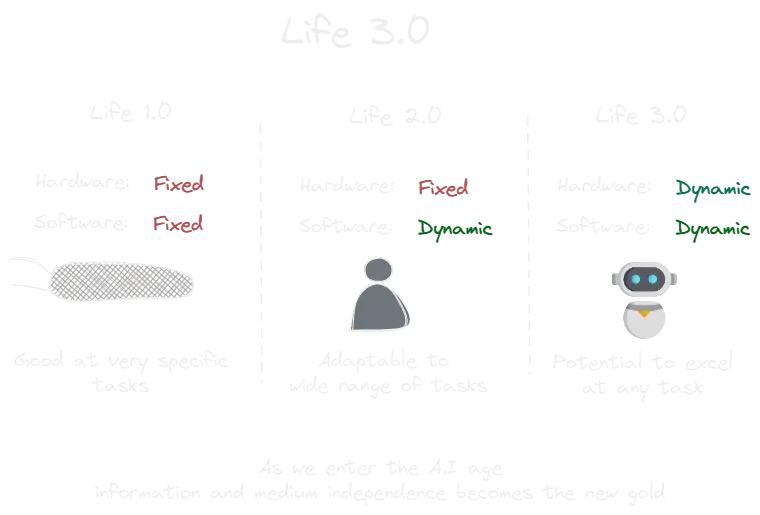

(Down:: Life Adaptability )

- life as a self-replicating information-processing system whose information (software) determines both its behavior and the blueprints for its hardware. (Location 480)

- “Life 1.0”: life where both the hardware and software are evolved rather than designed. You and I, on the other hand, are examples of “Life 2.0”: life whose hardware is evolved, but whose software is largely designed. By your software, I mean all the algorithms and knowledge that you use to process the information from your senses and decide what to do—everything (Location 513)

- there’s no way the information could have been preloaded into her brain, since the main information module she got from her parents (her DNA) lacks sufficient information-storage capacity. (Location 529)

- The ability to design its software enables Life 2.0 to be not only smarter than Life 1.0, but also more flexible. If the environment changes, 1.0 can only adapt by slowly evolving over many generations. Life 2.0, on the other hand, can adapt almost instantly, (Location 531)

- Yet despite the most powerful technologies we have today, all life forms we know of remain fundamentally limited by their biological hardware. (Location 545)

- Life 3.0, which can design not only its software but also its hardware. In other words, Life 3.0 is the master of its own destiny, finally fully free from its evolutionary shackles. (Location 549)
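The claim that DNA is too small to preload everything a person learns can be sanity-checked with rough arithmetic. A minimal sketch, assuming a haploid human genome of about 3.1 billion base pairs and 2 bits per base; it counts only raw capacity, ignoring the second chromosome copy and any compression.

```python
import math

# Assumption: approximate size of the haploid human genome, in base pairs.
GENOME_BASE_PAIRS = 3.1e9
# Each base is one of four letters (A, C, G, T), so it carries log2(4) = 2 bits.
BITS_PER_BASE = math.log2(4)


def genome_bytes(base_pairs: float = GENOME_BASE_PAIRS) -> float:
    """Raw (uncompressed) information capacity of a genome, in bytes."""
    return base_pairs * BITS_PER_BASE / 8


if __name__ == "__main__":
    print(f"DNA capacity: roughly {genome_bytes() / 1e6:.0f} MB")
```

The result is under a gigabyte, which is consistent with the highlight's point that a lifetime of learned knowledge has to be acquired rather than inherited.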

Chapter 3 - Controversies

Views about AI can be split into several groups:

- Techno-skeptics - those who believe that AGI won't exist for centuries, if ever

- Believers - those who think that AGI is possible. They are divided into:

    - Luddites - they fear an AI future of Big Brother control, immoral machines, killer robots, etc.

    - Beneficial AI - AI can be a force for good, but it has its dangers. We should be very attentive and make sure that we build it correctly

    - Digital utopians - AI will end all of humanity's problems. The only way to prosper and evolve as a species is through digital evolution.

- digital utopianism: that digital life is the natural and desirable next step in the cosmic evolution and that if we let digital minds be free rather than try to stop or enslave them, the outcome is almost certain to be good. (Location 597)

Chapter 4 - Misconceptions

Our main focus with AI should be aligning its goals with ours.

- what will affect us humans is what superintelligent AI does, not how it subjectively feels. (Location 802)

- The real worry isn’t malevolence, but competence. A superintelligent AI is by definition very good at attaining its goals, whatever they may be, so we need to ensure that its goals are aligned with ours. (Location 804)

- if we cede our position as smartest on our planet, it’s possible that we might also cede control. (Location 820)

Part 2 - Matter Turns Intelligent

Chapter 1 - What Is Intelligence?

Intelligence can be broadly defined as the ability to accomplish complex goals. Narrow intelligence can accomplish only a narrow set of goals, while general intelligence has the flexibility to learn and accomplish goals across many different fields.

- intelligence = ability to accomplish complex goals (Location 911)

- Before this tipping point is reached, the sea-level rise is caused by humans improving machines; afterward, the rise can be driven by machines improving machines, potentially much faster than humans could have done, (Location 981)

Chapter 2 - What Is Memory?

Memory is the ability to store information in a long-lived state, like a map that represents the world. What is stored is independent of how it is stored: the same information (e.g. a world map) can be on a piece of paper, in a digital file, in a song, or anywhere else. The medium is independent of the content. That is why computer memory could improve so dramatically: the storage medium kept getting better without affecting what is stored (see: Medium Independent).

Machines can "remember" because, as far as the stored information is concerned, there's no difference between digital and biological memory media.
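Medium independence can be illustrated by moving the same message through two different "media" and getting it back unchanged. A minimal sketch; the "song" medium and its tone frequencies are hypothetical choices made only for illustration.

```python
from typing import List

# Hypothetical "song" medium: a 0 bit becomes a low tone, a 1 bit a high tone (Hz).
TONES_HZ = {"0": 440, "1": 880}


def to_bits(text: str) -> str:
    """Encode text as a string of '0'/'1' characters (UTF-8, 8 bits per byte)."""
    return "".join(f"{byte:08b}" for byte in text.encode("utf-8"))


def from_bits(bits: str) -> str:
    """Decode a '0'/'1' string produced by to_bits back into text."""
    data = bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))
    return data.decode("utf-8")


def to_song(bits: str) -> List[int]:
    """Re-record the same bits as a sequence of tone frequencies."""
    return [TONES_HZ[b] for b in bits]


def from_song(song: List[int]) -> str:
    """Recover the bits from the tones."""
    bit_for_tone = {hz: bit for bit, hz in TONES_HZ.items()}
    return "".join(bit_for_tone[hz] for hz in song)


if __name__ == "__main__":
    message = "world map"
    song = to_song(to_bits(message))
    # The medium changed twice, but the information survived intact.
    print(from_bits(from_song(song)) == message)
```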

However, there is a difference in how information is retrieved from memory: computer systems retrieve it by location (like a file in a folder), while biological systems retrieve it by association (where have I seen this before?) (see: Memory Palace).

- information can take on a life of its own, independent of its physical substrate! Indeed, it’s usually only this substrate-independent aspect of information that we’re interested in: (Location 1043)

- Whereas you retrieve memories from a computer or hard drive by specifying where it’s stored, you retrieve memories from your brain by specifying something about what is stored. (Location 1076)

- Such memory systems are called auto-associative, since they recall by association rather than by address. (Location 1083)
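The difference between retrieval by address and retrieval by association can be sketched with a Hopfield network, the textbook example of an auto-associative memory. A minimal sketch, assuming ±1 units, the Hebbian learning rule and two hand-picked 8-unit patterns; the patterns, sizes and update order are illustrative choices, not taken from the book.

```python
from typing import List

Pattern = List[int]  # each entry is +1 or -1

# Two stored "memories", chosen to be orthogonal so both are stable.
PATTERN_A: Pattern = [1, 1, 1, 1, -1, -1, -1, -1]
PATTERN_B: Pattern = [1, -1, 1, -1, 1, -1, 1, -1]


def train(patterns: List[Pattern]) -> List[List[float]]:
    """Hebbian rule: strengthen the connection between units that agree."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / len(patterns)
    return weights


def recall(weights: List[List[float]], cue: Pattern, max_sweeps: int = 10) -> Pattern:
    """Retrieve by content: start from a partial or noisy cue and update
    units one at a time until the state stops changing."""
    state = list(cue)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            field = sum(w * s for w, s in zip(weights[i], state))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


if __name__ == "__main__":
    # Address-based retrieval: you need to know *where* the memory is kept.
    address_book = {"slot_0": PATTERN_A, "slot_1": PATTERN_B}
    print(address_book["slot_0"])

    # Content-based retrieval: a corrupted copy of A (two units flipped)
    # is enough to recall all of A.
    weights = train([PATTERN_A, PATTERN_B])
    noisy_a = [-1, 1, 1, 1, 1, -1, -1, -1]
    print(recall(weights, noisy_a) == PATTERN_A)
```

The dictionary lookup fails outright without the exact key, while the network only needs "something about what is stored" to settle into the full memory.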

Chapter 3 - What Is Computation?

Computation is the ability to transform information from one state to another. Computers store information as strings of bits (0/1 states), which are easy to read and easy to modify. By combining simple logic gates such as AND and OR, we can build very complicated functions out of these basic units, and those functions produce the complicated outputs that we recognize as intelligence.

- A computation is a transformation of one memory state into another. (Location 1094)

- if you can implement highly complex functions, then you can build an intelligent machine that’s able to accomplish highly complex goals. (Location 1127)

- the hardware is the matter and the software is the pattern. This substrate independence of computation implies that AI is possible: intelligence doesn’t require flesh, blood or carbon atoms. (Location 1200)
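The idea of building complicated functions out of simple logic can be made concrete. The note mentions AND/OR; this sketch goes one step further and uses a single primitive, NAND, which is functionally complete on its own, to build an adder. The 8-bit width is an arbitrary choice for illustration.

```python
def nand(a: int, b: int) -> int:
    """The only primitive: outputs 0 only when both input bits are 1."""
    return 1 - (a & b)


def not_(a: int) -> int:
    return nand(a, a)


def and_(a: int, b: int) -> int:
    return not_(nand(a, b))


def or_(a: int, b: int) -> int:
    return nand(not_(a), not_(b))


def xor(a: int, b: int) -> int:
    # Classic four-NAND construction of exclusive OR.
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


def half_adder(a: int, b: int):
    """Add two bits, returning (sum_bit, carry_bit)."""
    return xor(a, b), and_(a, b)


def add_bits(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition of two unsigned integers using only NAND-derived gates.
    Any carry beyond `width` bits is dropped, like a fixed-size register."""
    result, carry = 0, 0
    for i in range(width):
        a, b = (x >> i) & 1, (y >> i) & 1
        s1, c1 = half_adder(a, b)
        s, c2 = half_adder(s1, carry)
        carry = or_(c1, c2)
        result |= s << i
    return result


if __name__ == "__main__":
    print(add_bits(2, 2))
```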

Chapter 4 - What Is Learning?

Learning is the ability to acquire new information and adjust our knowledge based on how accurate our results are. Artificial neurons imitate biological neurons, but in a much simpler fashion. The idea is that each neuron and its connections hold a small piece of knowledge, and together they produce a complicated result. Learning is thus adjusting the weights (the function parameters) of each neuron, for example via backpropagation, until the results improve. This is why learning requires lots of data to train and test on.

- The ability to learn is arguably the most fascinating aspect of general intelligence. (Location 1269)

- evolution probably didn’t make our biological neurons so complicated because it was necessary, but because it was more efficient—and (Location 1318)

- there’s some simple deterministic rule, akin to a law of physics, by which the synapses get updated over time. As if by magic, this simple rule can make the neural network learn remarkably complex computations if training is performed with large amounts of data. (Location 1382)
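The "simple deterministic rule" by which connection weights get updated can be illustrated with a single artificial neuron learning logical AND. A minimal sketch, assuming a sigmoid neuron, a cross-entropy loss and plain gradient descent; the learning rate and epoch count are arbitrary. With one neuron, backpropagation collapses into this single update, while deeper networks pass the same error signal backwards layer by layer via the chain rule.

```python
import math
from typing import List, Tuple

Example = Tuple[Tuple[float, float], int]

# Training data for logical AND: output 1 only when both inputs are 1.
AND_DATA: List[Example] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data: List[Example], epochs: int = 2000, lr: float = 0.5):
    """Fit one sigmoid neuron with gradient descent on the cross-entropy loss."""
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in data:
            y = sigmoid(w1 * x1 + w2 * x2 + b)
            error = y - target  # dLoss/dz for sigmoid + cross-entropy
            # Nudge each parameter against its share of the error.
            w1 -= lr * error * x1
            w2 -= lr * error * x2
            b -= lr * error
    return w1, w2, b


def predict(params, x: Tuple[float, float]) -> int:
    w1, w2, b = params
    return 1 if sigmoid(w1 * x[0] + w2 * x[1] + b) >= 0.5 else 0


if __name__ == "__main__":
    params = train_neuron(AND_DATA)
    print([predict(params, x) for x, _ in AND_DATA])
```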

Part 3 - The Near Future

Chapter 1 - Breakthroughs

AI has advanced far faster than we originally thought, taking over a new field each year. Language, driving, resource management and strategy were all areas expected to take many more years, but now they are on the horizon.

- Everything we love about civilization is the product of human intelligence, so if we can amplify it with artificial intelligence, we obviously have the potential to make life even better. (Location 1669)

Chapter 2 - Bugs vs. Robust AI

There are four major issues we need to resolve when integrating AI into our lives. The more responsibility and power we give it, the greater the risk we take.

- Verification - making sure the program produces correct results according to its internal logic. For example, a calculator that says 2+2=5 has failed the verification test. In this simple example it's obvious, but there are many real cases, such as at NASA, where such errors slipped through

- Validation - making sure that the internal logic matches the truth in the outside world. For example, a correct calculation based on a false assumption, such as a wrong value for the gravity on Mars, can cause huge errors

- Control - in which cases do we want a human to be able to intervene?

- Security - how do we make sure our programs cannot be hacked?

- verification: ensuring that software fully satisfies all the expected requirements. The more lives and resources are at stake, the higher confidence we want that the software will work as intended. (Location 1721)

- whereas verification asks “Did I build the system right?,” validation asks “Did I build the right system?” For example, does the system rely on assumptions that might not always be valid? If so, how can it be improved to better handle uncertainty? (Location 1744)

- control: ability for a human operator to monitor the system and change its behavior if necessary. (Location 1790)
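The difference between verification and validation can be sketched with the note's own Mars-gravity example. A minimal illustration using a hypothetical falling-object model; the function names, the 5% tolerance and the rounded gravity values are assumptions for illustration.

```python
import math

EARTH_G = 9.81  # m/s^2
MARS_G = 3.71   # m/s^2, approximate surface gravity of Mars


def fall_time(height_m: float, g: float) -> float:
    """Seconds to fall height_m from rest with no air resistance: t = sqrt(2h/g)."""
    return math.sqrt(2 * height_m / g)


def verify(g: float = EARTH_G) -> bool:
    """Verification ("did I build the system right?"): does the code match its spec?
    Plug the answer back into h = g * t^2 / 2 and check that the height is recovered."""
    for h in (1.0, 20.0, 500.0):
        t = fall_time(h, g)
        if not math.isclose(0.5 * g * t ** 2, h):
            return False
    return True


def validate(assumed_g: float, measured_g: float, tolerance: float = 0.05) -> bool:
    """Validation ("did I build the right system?"): do the model's assumptions
    match the real world? Here: is the assumed gravity within 5% of a measurement?"""
    return abs(assumed_g - measured_g) <= tolerance * measured_g


if __name__ == "__main__":
    # A hypothetical Mars lander model mistakenly built with Earth's gravity:
    print(verify(EARTH_G))            # the code correctly implements its spec
    print(validate(EARTH_G, MARS_G))  # but the spec itself is wrong for Mars
    print(fall_time(20, EARTH_G), fall_time(20, MARS_G))
```

The buggy model passes verification and still fails validation, which is exactly why the two checks are listed separately.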

Chapter 3 - Laws

AI judges could be faster, more efficient, and less biased, whether the bias is implicit or explicit. Human judges, for example, can be racially biased, and they also rule differently when they're tired or hungry.

However, AIs can be biased too if they are fed biased data (GIGO: garbage in, garbage out), and the bias will be harder to detect since modern AI systems are less explainable.

- One day, such robojudges may therefore be both more efficient and fairer, by virtue of being unbiased, competent and transparent. (Location 1909)

Chapter 4 - Weapons

AI weapons will open a new frontier in human warfare, just as gunpowder and nuclear weapons did. Not only is the scale bigger, for example hacking a country's electrical grid or banking services, but attacks can also be more pinpointed. Instead of dropping a bomb that destroys whole cities, one can target specific people, or people from specific backgrounds, using face recognition and small devices. Similarly, just as the internet gave voice and access to individuals around the world, weapons may no longer be a state monopoly. Killer drones and printable guns put a huge potential for destruction at everyone's fingertips (see: democratization).

- If a million such killer drones can be dispatched from the back of a single truck, then one has a horrifying weapon of mass destruction of a whole new kind: one that can selectively kill only a prescribed category of people, leaving everybody and everything else unscathed. (Location 2097)

Chapter 5 - Jobs and Wages

What will happen to jobs in the future? Unlike in past technological shifts, the more closely AI can match our intelligence, the fewer areas remain in which new jobs can be created. This will increase the power of capital over labor, and many people will probably be left jobless. Universal basic income could be a potential solution, and perhaps we should start thinking about a world without work, where robots do everything and we focus on "jobs" that give us meaning, such as art, community and philosophy.

- digital technology drives inequality in three different ways. First, by replacing old jobs with ones requiring more skills, technology has rewarded the educated: (Location 2143)

- Second, they claim that since the year 2000, an ever-larger share of corporate income has gone to those who own the companies as opposed to those who work there—and (Location 2147)

Chapter 6 - Human Level Intelligence?

- There’s no longer a strong argument that we lack enough hardware firepower or that it will be too expensive. We don’t know how far we are from the finish line in terms of architectures, algorithms and software, but current progress is swift and the challenges are being tackled by a rapidly growing global community of talented AI researchers. (Location 2360)

Part 4 - Intelligence Explosion?

Chapter 1 - Totalitarianism

Without restrictions, AI could quickly turn a surveillance state into a police state, where freedom is rare and difficult to exercise.

- With superhuman technology, the step from the perfect surveillance state to the perfect police state would be minute. (Location 2442)

Chapter 2 - Prometheus Takes over the World

Whether by persuading, deceiving, or simply outmatching human technology, a superintelligent AI could easily break free of any limitations we impose.

- a confined, superintelligent machine may well use its intellectual superpowers to outwit its human jailers by some method that they (or we) can’t currently imagine. (Location 2505)

Chapter 3 - Slow Takeoff and Multipolar Scenarios

There are three possible scenarios:

- Slow takeoff - AI will develop slowly and gradually, giving us time to stay in control and likely resulting in multiple AGIs coexisting (a multipolar scenario)

- Fast takeoff - an AGI suddenly emerges and takes over the world, whether economically or even physically

- No takeoff - we will never develop an AGI

- To prevent cheaters from ruining the successful collaboration of a large group, it may be in everyone’s interest to relinquish some power to a higher level in the hierarchy that can punish cheaters: (Location 2692)

Chapter 4 - Cyborgs and Uploads

Perhaps the future holds the possibility of becoming cyborgs, combining biological systems with artificial ones or replacing them altogether. Maybe we'll even become brains in a vat.

Chapter 5 - What Will Actually Happen?

We don't know. AI researchers are divided, but their predicted timelines seem to be getting shorter as time passes.

Part 5 - Aftermath

Chapter 1 - Libertarian Utopia

This is a world divided into human zones and machine zones that coexist rather peacefully, with little interaction apart from those who choose to become cyborgs. The humans abstain from advanced technology, and the machines need nothing from them. This is perhaps the freest scenario, but it is unstable: the power gap between the groups would be massive, and the machines would eventually want more resources and land from the human side.

Chapter 2 - Benevolent Dictator

An AI that takes care of all our needs, rules and development, and keeps separate habitats so that different moral views can coexist. We need nothing and just enjoy life, but we may also feel empty, since we struggle to find meaning.

Chapter 3 - Egalitarian Utopia

Technology takes care of all our needs without a superintelligence that polices us. Everything is free, and we humans live and thrive together.

Chapter 4 - Gatekeeper

An AI whose sole purpose is to prevent any other superintelligence from being developed, while interfering as little as possible in human life.

Chapter 5 - Protector God

An AI that acts in the shadows to ensure our happiness. On one hand, this secrecy gives us more freedom and autonomy over our lives (compared with the previous utopias). On the other hand, we benefit less from the AI, since it has to hide its existence, for example by allowing some bad things to happen and withholding some beneficial technologies.

Chapter 6 - Enslaved God

A superintelligence that works for humans, mainly in an economic sense.

Chapter 7 - Conquerors

AI that tries to kill us (and probably succeeds), not necessarily because it wants to, but because doing so is the fastest way to accomplish its goals.

Chapter 8 - Descendants

AI replaces us as humanity's successors, embracing our values as much as possible.

Chapter 9 - Zookeeper

Humanity is kept in a zoo, as a small sample preserved for historical reasons.

Chapter 10 - 1984

An extreme surveillance state that enforces strict rules to prevent anyone from developing AGI.

Chapter 11 - Reversion

We (humanity) agree to go back to low-tech societies, like the Amish, to avoid the dangers of AGI.

Chapter 12 - Self Destruction

Whether with or without AI, humanity destroys itself

Part 6 - Our Cosmic Endowment

- ambition is a rather generic trait of advanced life. Almost regardless of what it’s trying to maximize, be it intelligence, longevity, knowledge or interesting experiences, it will need resources. (Location 3585)

Chapter 1 - Making the Most of Your Resources

As intelligence progresses, the real limit we will face is the laws of physics. In terms of energy, we could be far more efficient than we are now: our current processes convert only a fraction of a percent of matter into energy. Think what we could do with even one percent efficiency, let alone double digits. Options such as building Dyson spheres or using black holes as energy sources are among the most promising.

Chapter 2 - Gaining Resources Through Cosmic Settlement

Using advanced forms of travel at a significant fraction of the speed of light (even 20-30% of it) and the super-efficient power plants from the previous chapter, the universe is within our grasp. Since we don't necessarily have to transport humans, only the information needed to reproduce them, and can use materials from other planets instead of packing everything from Earth, there are almost no limits on how far and how fast we can expand.

- maximally efficient power plants and computers would enable superintelligent life to perform a mind-boggling amount of computation. Powering your thirteen-watt brain for a hundred years requires the energy in about half a milligram of matter—less than in a typical grain of sugar. (Location 4095)
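The half-milligram figure in the highlight follows directly from E = mc². A quick check, assuming a Julian year and 100% mass-to-energy conversion; the optional `efficiency` argument is an addition for illustration, showing how much more matter a less efficient process would need. The roughly 0.7% figure for hydrogen fusion is an approximate textbook value, not from the note.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year
C = 299_792_458.0                      # speed of light, m/s


def matter_needed_kg(power_w: float, years: float, efficiency: float = 1.0) -> float:
    """Mass that must be converted to run `power_w` watts for `years` years,
    via E = m * c^2, if only `efficiency` of the mass becomes usable energy."""
    energy_j = power_w * years * SECONDS_PER_YEAR
    return energy_j / (efficiency * C ** 2)


if __name__ == "__main__":
    brain = matter_needed_kg(13, 100)
    print(f"perfect conversion: {brain * 1e6:.2f} mg")
    # Assumption: hydrogen fusion converts roughly 0.7% of rest mass into energy.
    print(f"fusion-like conversion: {matter_needed_kg(13, 100, 0.007) * 1e6:.0f} mg")
```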

Chapter 3 - Cosmic Hierarchies

There is probably only a small chance that we are not alone: if advanced civilizations were common, we would likely have detected them by now as we survey the skies. Not only does life itself have so many requirements, but intelligence is even harder to come by.

But if we are not alone, we would most likely share information rather than conquer each other, since a superintelligence could create anything out of any matter. Information would therefore be the truly scarce resource.

- for an intelligent information-processing system, going big is a mixed blessing involving an interesting trade-off. On one hand, going bigger lets it contain more particles, which enable more complex thoughts. On the other hand, this slows down the rate at which it can have truly global thoughts, since it now takes longer for the relevant information to propagate to all its parts. (Location 4112)

- If sharing or trading of information emerges as the main driver of cosmic cooperation, then what sorts of information might be involved? Any desirable information will be valuable if generating it requires a massive and time-consuming computational effort. (Location 4151)

- there are strong incentives for future life to cooperate over cosmic distances, but it’s a wide-open question whether such cooperation will be based mainly on mutual benefits or on brutal threats—the (Location 4186)
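The size trade-off in the highlight about going big comes down to light-travel time: one part of a computer cannot hear from another faster than light can cross the gap between them. A rough sketch, assuming signals travel at exactly light speed (real hardware is slower) and using round example sizes, with the Milky Way taken as roughly 100,000 light-years across.

```python
C_KM_PER_S = 299_792.458
LIGHT_YEAR_KM = 9.4607e12               # approximate
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # Julian year


def crossing_time_s(diameter_km: float) -> float:
    """Minimum time for a signal to cross a system of the given size, at light speed."""
    return diameter_km / C_KM_PER_S


if __name__ == "__main__":
    # Example sizes are rough round numbers.
    sizes_km = {
        "Earth-sized computer": 12_742,
        "Milky-Way-sized computer": 100_000 * LIGHT_YEAR_KM,
    }
    for name, d in sizes_km.items():
        t = crossing_time_s(d)
        print(f"{name}: {t:.3g} s ({t / SECONDS_PER_YEAR:.3g} years)")
```

An Earth-sized mind could complete a globally coordinated thought in about 0.04 seconds, while a galaxy-sized one would need on the order of 100,000 years per thought.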

Part 7 - Goals

Goals are essential to our own sense of meaning as humans, and they matter even more once powerful entities operate based on the goals we give them.

- Should we give AI goals, and if so, whose goals? How can we give AI goals? Can we ensure that these goals are retained even if the AI gets smarter? Can we change the goals of an AI that’s smarter than us? What are our ultimate goals? These questions are not only difficult, but also crucial for the future of life: if we don’t know what we want, we’re less likely to get it, and if we cede control to machines that don’t share our goals, then we’re likely to get what we don’t want. (Location 4401)

Chapter 1 - Physics: The Origin of Goals

Physics itself pushes towards efficiency, as if driven by a goal: particles form systems and organized structures that make the best use of their circumstances, specifically the energy available to them.

- nature appears to have a built-in goal of producing self-organizing systems that are increasingly complex and lifelike, and this goal is hardwired into the very laws of physics. (Location 4454)

Chapter 2 - Biology: The Evolution of Goals

Biology simply continues the same physical tendency towards optimization. Life is the ability to replicate successful complex structures that compete with other entities for resources: a replicating optimization process.

Since life is complex, we can no longer follow a single simple rule for optimization (see: Complexity). We also can't follow an endless list of rules, since that is impractical. Instead, we have heuristics that guide us in general and in partially ambiguous cases, such as "when hungry -> eat". These heuristics are our emotions (see: Emotions as decision heuristics).

- At some point, a particular arrangement of particles got so good at copying itself that it could do so almost indefinitely by extracting energy and raw materials from its environment. We call such a particle arrangement life. (Location 4465)

- a living organism is an agent of bounded rationality that doesn’t pursue a single goal, but instead follows rules of thumb for what to pursue and avoid. (Location 4504)

Chapter 3 - Engineering: Outsourcing Goals

Life forms are not the only things that have goals. Everything we design has our goals imbued in it, like a tool built for a specific purpose, so designed objects also carry goals that support life.

Chapter 4 - Friendly AI: Aligning Goals

To make sure AI remains a tool that is beneficial to us, it has to be able to:

- Learn our goals

- Adopt our goals

- Retain our goals over time

This is in essence the AI alignment problem. The AI has to be smart enough to understand our goals, but not so smart that it ignores us, which leaves only a short window in which to load our goals into it. Learning our goals is difficult on its own, since people are often contradictory, change over time, and have different goals depending on context; comprehending all this requires a lot of subtlety from an AI. Retaining our goals is also difficult, since almost any AI will develop two subgoals, no matter what its initial goals are:

- Self-preservation - no goal can be accomplished if the AI is shut down

- Resource acquisition - more resources mean better capabilities for completing the task

These subgoals, along with its increasing intelligence, make it more likely that the AI will abandon its original goals and reinterpret them based on what it assumes is the best "true" interpretation, regardless of what we "inferior beings" think.

- the real risk with AGI isn’t malice but competence. A superintelligent AI will be extremely good at accomplishing its goals, and if those goals aren’t aligned with ours, we’re in trouble. (Location 4582)

- The hope is therefore that by observing lots of people in lots of situations (either for real or in movies and books), the AI can eventually build an accurate model of all our preferences. (Location 4615)

- the time window during which you can load your goals into an AI may be quite short: the brief period between when it’s too dumb to get you and too smart to let you. (Location 4631)

Chapter 5 - Ethics: Choosing Goals

Even if we solve the alignment problem, we still need to agree among ourselves on what our goals and ethical principles should be. After thousands of years of philosophy, we still haven't reached agreement about morality. However, a combination of the following seems like a good start:

- Utilitarianism - the most pleasurable experiences for the most conscious minds

- Autonomy - the freedom to act, to choose your destiny, and to fulfill your potential

- Diversity - a richness of experiences and worldviews

- Legacy - having a say in the morality and desired actions of future generations

This is not a complete list, and it is not without its problems.

We have to start discussing philosophy now, before it is too late to align AI with our conclusions.

- many ethical principles have commonalities with social emotions such as empathy and compassion: they evolved to engender collaboration, and they affect our behavior through rewards and punishments. (Location 4765)

- it’s tricky to fully codify even widely accepted ethical principles into a form applicable to future AI, (Location 4832)

Chapter 6 - Ultimate Goals

- Even if the AI learned to accurately predict the preferences of some representative human, it wouldn’t be able to compute the goodness function for most particle arrangements: (Location 4896)

Part 8 - Consciousness

Consciousness is the second pillar on which we differ from machines: not only do we have moral codes, but we also have subjective experience.

We understand consciousness even less than we understand morality. We are not sure what it is (which physical processes produce it), how to explain it (the hard problem of consciousness), or where it is located (which parts of the brain "contain" our consciousness).

It is unclear how a group of particles forms a consciousness, but apparently it somehow does, since stones are not conscious and we are (although panpsychists might object).

- any theory predicting which physical systems are conscious (the pretty hard problem) is scientific, as long as it can predict which of your brain processes are conscious. However, the testability issue becomes less clear for the higher-up questions (Location 5088)

- Not only do you know that you’re conscious, but it’s all you know with complete certainty—everything else is inference, (Location 5107)

Chapter 5 - Experimental Clues about Consciousness

Consciousness is one possible state of our mind, while other processes, like instincts, run unconsciously because that is faster and requires fewer resources.

- conscious information processing should be thought of as the CEO of our mind, dealing with only the most important decisions requiring complex analysis of data from all over the brain. (Location 5139)

- you can often react to things faster than you can become conscious of them, which proves that the information processing in charge of your most rapid reactions must be unconscious. (Location 5238)

Chapter 6 - Theories of Consciousness

Consciousness is related to the way we process information (for example, in visual illusions it's not our eyes that fail us but our mind), which suggests that it is also independent of the medium, just as information is. If so, consciousness is a second layer of substrate independence, which would explain why it feels so abstract and "unworldly" (like the notion of a soul). It would also mean that non-humans can be conscious.

However, we must consider that an AI would be conscious in a different way than we are, since it would have different sensory inputs and a different processing speed.

- consciousness is a physical phenomenon that feels non-physical because it’s like waves and computations: it has properties independent of its specific physical substrate. (Location 5348)

Chapter 7 - How Might AI Consciousness Feel?

- Not only do we currently lack a theory that answers this question, but we’re not even sure whether it’s logically possible to fully answer it. (Location 5438)

- we must avoid the pitfall of assuming that being an AI necessarily feels similar to being a person. (Location 5451)

- Their subjective experience of free will is simply how their computations feel from inside: they don’t know the outcome of a computation until they’ve finished it. That’s what it means to say that the computation is the decision. (Location 5513)

Chapter 9 - Meaning

If the universe is meaningless, and consciousness is what gives it meaning, then we should populate the universe with as many sentient beings as possible.

- It’s not our Universe giving meaning to conscious beings, but conscious beings giving meaning to our Universe. So the very first goal on our wish list for the future should be retaining (and hopefully expanding) biological and/or artificial consciousness in our cosmos, rather than driving it extinct. (Location 5522)